Originally published on StateStreet.Listen

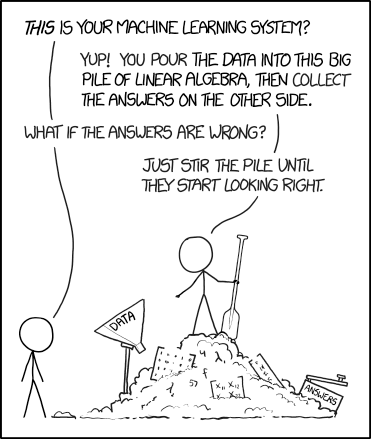

Copious amounts of data (2.5 quintillion bytes daily and growing) are created, digested, crunched, structured and ultimately delivered on the other side of algorithms to form the foundation of AI and machine-learning tools. How a machine actually learns is incredibly complicated, but in theory, AI augments, or in some instances replaces, human intelligence when it comes to solving problems. Raw data, complex math, intricately coded computer programs and more all form the basis of a very technical process.

But in reality, this process isn’t as clinical as it may seem. Because data collection and selection are done by humans, biases such as political leanings, gender stereotypes, race considerations and more can creep in. Our very human (and often illogical) biases ultimately become encoded in a system that was meant to be completely impartial and unprejudiced. This hidden algorithmic bias is then reinforced every time the algorithm runs and actually becomes a key part of how the machine learns.

This raises one of the fundamental questions about AI. It’s not just about incorporating smarter machine learning, nor about applying the most sophisticated math to assess risk profiles, track market trends or identify transaction anomalies that predict fraud. It is: How do we make strong AI without bias?

The answer? Diversity.

Just as a more diverse workforce makes for a better and more profitable business world, diversity can also make for better AI.

There are three types of algorithmic biases we need to be aware of as we build our intelligent technologies. The first is pre-existing bias, which is a consequence of underlying social and institutional ideologies. It preserves ‘status-quo’ biases (gendered norms or Euro-centric perspectives, for instance) and replicates that bias into all future uses of the algorithm. It’s almost impossible to ask the necessary questions and challenge the status-quo assumptions we have around human behavior if we don’t have a diverse group of people at the table. Age. Gender. Race. Socioeconomic background. Education. Even neurodiversity — the more perspectives and backgrounds we pull together, the more likely we are to catch and cull pre-existing bias from our data sets.
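
One way to make "catching pre-existing bias in our data sets" concrete is a simple representation audit. The sketch below is only an illustration, not a method described in this article: the function name `representation_gaps`, the `gender` attribute and the 50/50 reference shares are all hypothetical. It compares how often each group appears in a data set against the share a team expects to see.

```python
from collections import Counter


def representation_gaps(records, attribute, reference_shares):
    """Observed share minus reference share for each group of `attribute`.

    Hypothetical helper: a negative value means the group is
    under-represented relative to the reference the team chose.
    """
    counts = Counter(record[attribute] for record in records)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no records to audit")
    groups = set(counts) | set(reference_shares)
    return {
        group: counts.get(group, 0) / total - reference_shares.get(group, 0.0)
        for group in groups
    }


# Hypothetical toy data: 2 of 10 records come from group "F".
records = [{"gender": "F"}] * 2 + [{"gender": "M"}] * 8
gaps = representation_gaps(records, "gender", {"F": 0.5, "M": 0.5})
for group, gap in sorted(gaps.items()):
    print(f"{group}: {gap:+.0%} versus reference")
```

A check like this only flags a gap. Deciding which attributes to audit, and what the reference shares should be, is exactly the kind of judgment that benefits from a diverse group at the table.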

Another type of algorithmic bias is technical. It arises from limitations of a program, its computational power, its design or other constraints on the system, and it happens when we attempt to formalize decisions into concrete steps on the assumption that human behavior will correlate. Bias is particularly problematic with structured data. We need structured data to give machines context and content understanding (think search engines or FAQ responses for chatbots). Yet all structured data is touched by humans, making the structure subject to qualitative categorization, which can be highly subjective, especially when that data is attached to particular social, political and legal norms.
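
The subjectivity of human categorization can be measured, at least roughly. As a hedged illustration (the article does not prescribe this), the sketch below computes Cohen's kappa, a standard agreement statistic, for two people labeling the same items. The label values and annotator lists are made up for the example. A low score suggests the categories themselves are open to interpretation.

```python
from collections import Counter


def cohen_kappa(labels_a, labels_b):
    """Cohen's kappa: agreement between two annotators, corrected for chance."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two non-empty label lists of equal length")
    n = len(labels_a)
    # Fraction of items on which the two annotators agree.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Agreement expected if each annotator labeled at random
    # according to their own label frequencies.
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    expected = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both annotators used a single identical label throughout
    return (observed - expected) / (1 - expected)


# Hypothetical labels from two annotators for the same four records.
annotator_a = ["risk", "risk", "safe", "safe"]
annotator_b = ["risk", "risk", "safe", "risk"]
kappa = cohen_kappa(annotator_a, annotator_b)
print(f"kappa = {kappa:.2f}")
```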

Finally, there’s emergent bias, which results from a reliance on old algorithms across new or unanticipated contexts. What does that mean? New information types such as new regulations or laws, business models or even shifting cultural norms may be incorporated into old algorithms that were not trained to evaluate the data properly. New information may even be completely ignored because it doesn’t look like what the machine was programmed to recognize. More diversity in the team selecting data that the machine learns from means a higher probability that this data will span a more diverse range of features. And that makes for better, less biased output.
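
A small guard against emergent bias is to notice when incoming data contains values the model never saw in training, rather than letting them be silently ignored. The following is a minimal sketch under stated assumptions: the function `unseen_values`, the field name `product` and the sample rows are hypothetical, and a real system would also need to watch for subtler shifts than brand-new categories.

```python
def unseen_values(training_rows, new_rows, fields):
    """Map each field to the values in `new_rows` that never appear in `training_rows`."""
    unseen = {}
    for field in fields:
        seen = {row[field] for row in training_rows if field in row}
        novel = {row[field] for row in new_rows if field in row} - seen
        if novel:
            unseen[field] = novel
    return unseen


# Hypothetical example: a new product type shows up after training.
training_rows = [{"product": "loan"}, {"product": "card"}]
new_rows = [{"product": "loan"}, {"product": "crypto_wallet"}]
flagged = unseen_values(training_rows, new_rows, ["product"])
print(flagged)
```

Flagged values are a prompt for people to review whether the old algorithm still applies, not an automatic fix.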

To ensure we’re building the most effective AI solutions, diversity is the best insurance policy we have — diversity of teams and data, and rigorous questioning of our own assumptions. As long as humans are creating the algorithms powering AI and machine learning, and as long as humans are qualitatively structuring the data we use to train the systems, the possibility for biased outcomes exists. Diversity is the antidote to hidden algorithmic bias.